Do LLMs Model Spoken Dialogue Accurately?

Transformer-based Large Language Models (LLMs) have shown impressive performance in various language tasks, but their ability to predict spoken language in natural interactions remains uncertain. Spoken and written language differ significantly in syntax, pragmatics, and norms. While LLMs capture linguistic regularities statistically, they may not grasp the normative structure of interactive spoken language. This study evaluates LLMs, specifically GPT-2 variants, on spoken dialogue. After fine-tuning the models on English dialogue transcripts, we assessed whether they take speaker identity into account when making predictions. Although the models used speaker identity, they occasionally hallucinated transitions for plausibility. Thus, LLMs generate norm-conforming text but do not yet replicate human conversational behavior accurately.

Project Dates: 6/10/22 - Present

Date Published: 6/23/24

Overview

As the primary developer for this project, I built the codebase with PyTorch, PyTorch Lightning, Hydra, and Weights and Biases. Because a research paper based on this work is still in development, the code repository is not yet available for public release. I have been supported in this project by J.P. de Ruiter, the Project Principal Investigator (PI); Julia Mertens, the first author and project lead; and Lena Warnke, the third author.

Introduction

Over the past few years, Generative Pre-trained Transformers (GPT) have made headlines for their uncanny ability to produce human-like written language. One notable example was a blog post that topped Hacker News, only for the author to later reveal it was written by GPT-3. Such successes have sparked fears about GPT-3 being used to mass-produce misinformation. A recent study even found that readers couldn’t distinguish between human-written news articles and those written by GPT-2. In fact, some readers found GPT-2’s articles more credible than those written by humans.

All of this underscores the impressive progress GPT and other language models have made in modeling and simulating written language. However, there has been relatively little progress in developing language models that can accurately simulate spoken language. Achieving this would bring numerous benefits. Conversational AI agents with more naturalistic speech would be easier to use, understand, and trust. Language models could assist psycholinguists with stimulus norming, ensuring that stimuli are predictably surprising. They could even help create stimuli, a process that typically requires extensive time and creativity. These are just a few ways that language models capable of replicating human dialogue would be valuable.

Despite the potential, there is currently no evidence that state-of-the-art language models, trained on written language scraped from websites like Reddit, can quickly learn to use language in dialogue effectively.

In our project, we implemented two versions of GPT-2: one sensitive to speaker identity and the other unaware of it. We fine-tuned these models on high-quality, internally recorded spoken language data. We then generated conditional probabilities for specially recorded stimuli designed to make sense when spoken by a particular speaker, with both congruent and incongruent conditions. If the model captures the structure of dialogue, we expect its output probabilities to align with the stimuli conditions.

By exploring these models’ ability to handle spoken language, we aim to take a step closer to creating more sophisticated and accurate conversational AI.

Models

GPT-2 is a transformer-based language model pre-trained on extensive written text datasets. It uses word and positional embeddings to predict the next word in a sequence. TurnGPT is an enhanced version of GPT-2 that includes explicit speaker embeddings, which provide the model with information about who is speaking at each point in the dialogue.
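To make the difference between the two input representations concrete, here is a minimal sketch in plain Python. It assumes the speaker embedding is simply added to the word and positional embeddings for each token. The function names, the tiny embedding tables, and their values are all made up for illustration; this is not the project's code.

```python
from typing import List, Optional, Sequence

Vector = List[float]


def add_vectors(*vectors: Sequence[float]) -> Vector:
    """Element-wise sum of equally sized vectors."""
    return [sum(components) for components in zip(*vectors)]


def embed_tokens(
    token_ids: Sequence[int],
    word_emb: Sequence[Vector],
    pos_emb: Sequence[Vector],
    speaker_ids: Optional[Sequence[int]] = None,
    speaker_emb: Optional[Sequence[Vector]] = None,
) -> List[Vector]:
    """Build one input vector per token.

    Without speaker information: word + position (GPT-2 style input).
    With speaker information: word + position + speaker (assumed here to be
    how an explicit speaker embedding enters the model).
    """
    use_speakers = speaker_ids is not None and speaker_emb is not None
    if use_speakers and len(speaker_ids) != len(token_ids):
        raise ValueError("need exactly one speaker id per token")
    inputs = []
    for position, token_id in enumerate(token_ids):
        parts = [word_emb[token_id], pos_emb[position]]
        if use_speakers:
            parts.append(speaker_emb[speaker_ids[position]])
        inputs.append(add_vectors(*parts))
    return inputs


# Toy tables (made-up values): 3 word types, 4 positions, 2 speakers, dimension 2.
WORD_EMB = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
POS_EMB = [[0.0, 0.0], [0.01, 0.01], [0.02, 0.02], [0.03, 0.03]]
SPEAKER_EMB = [[1.0, 0.0], [0.0, 1.0]]

token_ids = [0, 1, 1, 2]    # the same word (id 1) occurs twice
speaker_ids = [0, 0, 1, 1]  # the speaker changes between the two occurrences

implicit = embed_tokens(token_ids, WORD_EMB, POS_EMB)
explicit = embed_tokens(token_ids, WORD_EMB, POS_EMB, speaker_ids, SPEAKER_EMB)
```

In the `implicit` case the two occurrences of word 1 differ only by position, while in the `explicit` case they also differ by who is speaking, which is the extra signal a speaker-aware model has available.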

Fine-tuning

The models were fine-tuned on the In Conversation Corpus (ICC), which contains high-quality transcripts of naturalistic conversations. Two datasets were used for this purpose: one with five conversations to investigate the impact of limited fine-tuning data, and another with twenty-eight conversations to assess the benefits of additional fine-tuning data. The fine-tuning process involved adjusting the pre-trained models using the spoken dialogue transcripts to help them learn patterns and dependencies specific to spoken language.

Experimental Setup

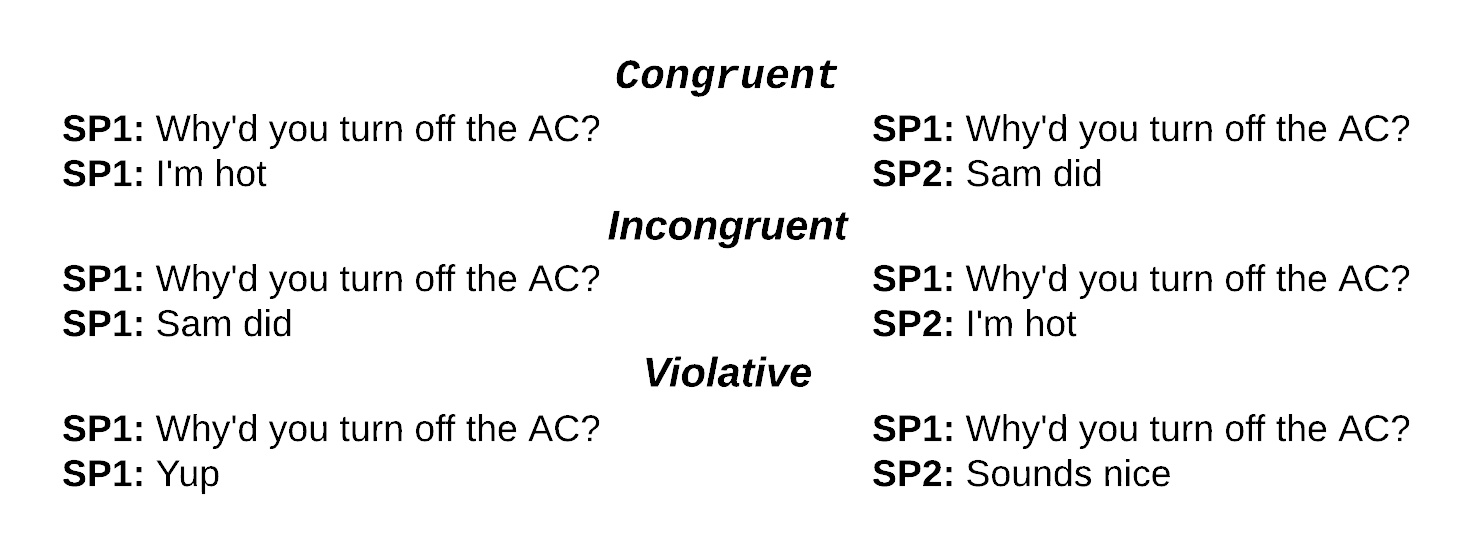

The study used stimuli from Warnke & de Ruiter (2022), which consisted of two-turn sequences where the second turn varied by speaker identity and plausibility. The conditions included:

- Congruent: Turns that fit naturally given the preceding context and speaker identity.

- Incongruent: Turns that do not fit naturally given the speaker identity.

- Violative: Turns that are implausible regardless of the speaker.

The study measured two quantities. Surprisal is the negative log probability that the model assigns to a word given its context; it is commonly interpreted as the cognitive load or surprise associated with predicting that word, so higher values mean the word was less expected. Offset is the time difference between the end of a turn and the moment a human participant pressed a button to indicate that they anticipated the end of the turn.
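As a small worked example of the surprisal measure, the sketch below sums per-word surprisal over a turn. It assumes the model's conditional probability for each word of the second turn is already available as a number; the function names are hypothetical, and the natural logarithm (nats) is an assumption, since the base is not stated here.

```python
import math
from typing import Sequence


def token_surprisal(probability: float) -> float:
    """Surprisal of one word: the negative log of its conditional probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in the interval (0, 1]")
    return -math.log(probability)


def turn_surprisal(token_probabilities: Sequence[float]) -> float:
    """Surprisal of a whole turn: the sum of its per-word surprisals."""
    return sum(token_surprisal(p) for p in token_probabilities)


# Made-up probabilities for a two-word turn; a less expected turn scores higher.
expected_turn = turn_surprisal([0.5, 0.25])
unexpected_turn = turn_surprisal([0.05, 0.01])
```

Because the per-word terms are summed, longer turns tend to accumulate more surprisal, so comparisons are most meaningful between turns of matched length or wording.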

\[\begin{align*} \textit{Second Turn Surprisal} &= \sum_{i=1}^{N} -\log P(w_i^{2} \mid w_1^{2}, \ldots, w_{i-1}^{2}, w_1^{1}, \ldots, w_K^{1}) \end{align*}\]

Results

Effect of Congruence and Speaker

In the different-speaker condition, fine-tuned models showed higher surprisal for incongruent turns compared to congruent turns, aligning with human responses. This suggests that the models learned to use speaker identity to some extent. However, in the same-speaker condition, the models exhibited higher surprisal for congruent turns, indicating potential issues with handling continuous speech from the same speaker.
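One simple way to summarize this kind of comparison is the difference in mean surprisal between incongruent and congruent turns. The sketch below is only an illustration: the function name, the dictionary layout, and the numbers are hypothetical and are not the study's data or analysis.

```python
from statistics import mean
from typing import Dict, List


def congruence_effect(surprisal_by_condition: Dict[str, List[float]]) -> float:
    """Mean surprisal of incongruent turns minus mean surprisal of congruent turns.

    A positive value means incongruent turns were more surprising to the model;
    a negative value means congruent turns were more surprising.
    """
    return mean(surprisal_by_condition["incongruent"]) - mean(
        surprisal_by_condition["congruent"]
    )


# Made-up turn-level surprisal values for one speaker condition.
toy_values = {"congruent": [10.0, 12.0], "incongruent": [15.0, 17.0]}
effect = congruence_effect(toy_values)
```

Under this summary, the different-speaker pattern described above corresponds to a positive effect, and the same-speaker pattern corresponds to a negative one.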

Effect of Fine-tuning Data

Models fine-tuned on five and twenty-eight conversations exhibited higher overall surprisal than the pre-trained-only model. The increase was more pronounced for incongruent stimuli, which suggests that fine-tuning improved the models’ ability to distinguish between congruent and incongruent turns and, in that sense, helped them handle spoken dialogue. However, the gains diminished as the amount of fine-tuning data increased, suggesting that a smaller amount of high-quality data might suffice.

Explicit vs. Implicit Speaker Representation

TurnGPT, with explicit speaker embeddings, produced lower overall surprisal values than GPT-2 and handled same-speaker conditions better, showing less surprisal there. This suggests that explicit speaker information helps the model process speaker identity and track dialogue dynamics.

Predicting Human Response Times

Surprisal was negatively correlated with human response times, contrary to the initial hypothesis: higher surprisal was associated with earlier responses from human participants. Further qualitative analysis suggested that participants might wait longer for turns that project additional talk, which would affect response times and points to a complex interaction between surprisal and turn-taking behavior.
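For readers who want to see what a negative correlation means computationally, here is a minimal sketch of Pearson's correlation coefficient in plain Python. Using Pearson's r is an assumption made for illustration; the study's actual statistical analysis may differ, and the numbers below are made up.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return covariance / math.sqrt(var_x * var_y)


# Made-up values: turn surprisal and response offsets in milliseconds.
toy_surprisal = [20.0, 25.0, 30.0, 35.0]
toy_offsets_ms = [180.0, 150.0, 140.0, 90.0]
r = pearson_r(toy_surprisal, toy_offsets_ms)  # negative: higher surprisal, earlier response
```

A coefficient below zero, as in this toy data, is the direction of the relationship reported above; it says nothing on its own about why participants responded earlier.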

Discussion

The study found that LLMs, even when fine-tuned on spoken dialogue, do not fully replicate human-like predictions. They can use speaker identity to some extent, particularly in different-speaker conditions, but struggle with same-speaker conditions. Fine-tuning improves performance, but the gains diminish with more data. TurnGPT’s explicit speaker embeddings show potential but do not completely solve the problem.

These findings suggest that current LLMs are not yet suitable for fully modeling spoken dialogue dynamics. More targeted training approaches, such as pre-training on spoken dialogue data and developing more effective fine-tuning strategies, are necessary to enhance LLMs’ capabilities in handling the complexities of spoken language interaction.

Conclusion

LLMs trained primarily on written monologue data have limited ability to predict spoken dialogue accurately. While fine-tuning on spoken dialogue improves performance, significant challenges remain. Further improvements in training strategies and pre-training on spoken dialogue data are needed to enable LLMs to handle the unique dynamics of spoken language interactions effectively.