GailBot

Researchers rely on detailed transcriptions of language use, including paralinguistic features such as overlaps, prosody, and intonation, which are time-consuming to create manually. No current Speech to Text (STT) system integrates these features. GailBot is a program that combines STT services with plugins to generate transcripts that follow Conversation Analysis (CA) standards, and it lets researchers add new plugins or improve existing ones. This page describes GailBot's architecture, heuristics, and use of machine learning, and reports its improvement over existing transcription software.

Project Dates: 8/1/18 - Present

Date Published: 6/23/24

Overview

GailBot, developed by the Human Interaction Lab at Tufts University, advances dialogue systems for human-robot interaction. It features algorithms for turn construction, classification of silences by duration, detection of overlapping speech from timing and speaker identity, estimation of syllable rate, and neural network-based laughter detection. These algorithms aim to make interactions more naturalistic, contributing to fields like artificial intelligence and machine learning. Explore our Human Interaction Lab for research details and visit the project package page. The code repository for this project is private, but we can share more details upon request.

Please fill out this form to request access to GailBot and join our Slack community.

Introduction

Researchers in fields like conversation analysis, psychology, and linguistics require detailed transcriptions of language use, including paralinguistic features such as overlaps, prosody, and intonation. Manual transcription is time-consuming, and no existing Speech to Text (STT) system integrates these features. GailBot, a program developed to address this, combines STT services with plugins to automatically generate first drafts of transcripts that adhere to Conversation Analysis (CA) standards. GailBot also allows researchers to add new plugins or improve existing ones. The paper describes GailBot’s architecture and its use of computational heuristics and machine learning, and evaluates its output against human transcribers and other automated systems. Despite its limitations, GailBot represents a significant improvement in dialogue transcription software.

Architecture and Algorithms

Architecture

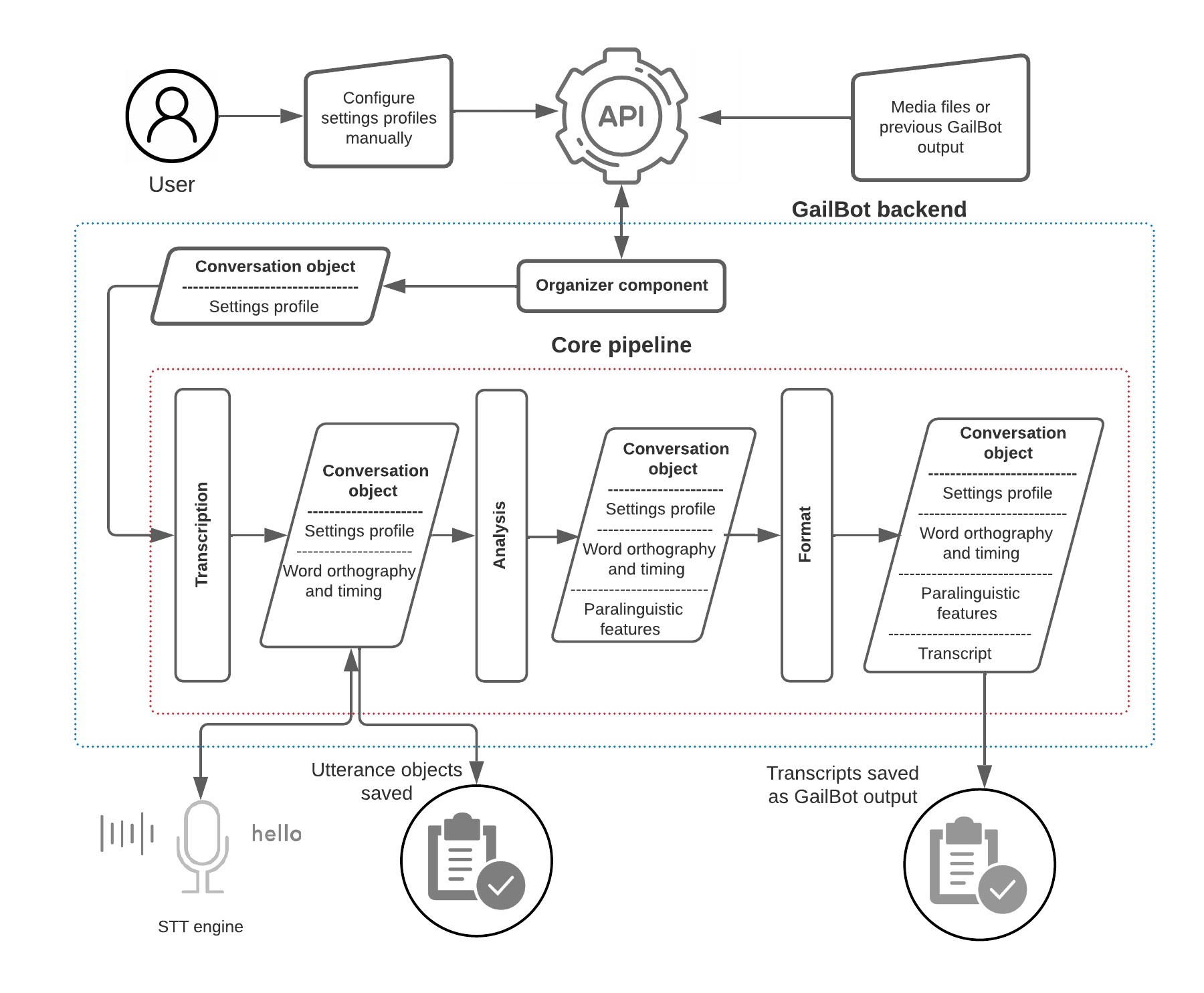

GailBot’s architecture includes an API with two main components: an organizer and a core pipeline. The organizer handles media files and previous GailBot outputs, creating conversation objects with settings profiles. The core pipeline processes these objects in sequential steps: transcription, analysis, and formatting into CA transcripts.
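
The organizer-plus-pipeline flow described above can be illustrated with a minimal sketch. This is not GailBot's actual API (the repository is private): the names `Conversation`, `organize`, `transcribe`, `analyze`, `format_transcript`, and `run_pipeline` are all hypothetical, and the transcription step returns fixed utterances instead of calling an STT service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Conversation:
    """Hypothetical conversation object created by the organizer."""
    media_path: str
    settings: Dict[str, str] = field(default_factory=dict)
    utterances: List[dict] = field(default_factory=list)
    annotations: Dict[str, list] = field(default_factory=dict)
    transcript: str = ""


def organize(media_path: str, settings: Dict[str, str]) -> Conversation:
    """Organizer: pair a media file with a settings profile."""
    return Conversation(media_path=media_path, settings=dict(settings))


def transcribe(conv: Conversation) -> Conversation:
    # Stand-in for an STT service call (assumption: fixed sample output).
    conv.utterances = [
        {"speaker": "A", "start": 0.0, "end": 1.2, "text": "hello there"},
        {"speaker": "B", "start": 1.5, "end": 2.0, "text": "hi"},
    ]
    return conv


def analyze(conv: Conversation) -> Conversation:
    # Stand-in for plugin analysis: measure silence between utterances.
    silences = []
    for prev, nxt in zip(conv.utterances, conv.utterances[1:]):
        silences.append(round(nxt["start"] - prev["end"], 3))
    conv.annotations["silences"] = silences
    return conv


def format_transcript(conv: Conversation) -> Conversation:
    # Simplified CA-style output: timed silences in parentheses.
    silences = conv.annotations.get("silences", [])
    lines = []
    for i, utt in enumerate(conv.utterances):
        lines.append(f'{utt["speaker"]}: {utt["text"]}')
        if i < len(silences):
            lines.append(f"({silences[i]:.1f})")
    conv.transcript = "\n".join(lines)
    return conv


# The core pipeline runs its steps strictly in sequence.
PIPELINE: List[Callable[[Conversation], Conversation]] = [
    transcribe,
    analyze,
    format_transcript,
]


def run_pipeline(conv: Conversation) -> Conversation:
    for step in PIPELINE:
        conv = step(conv)
    return conv
```

Calling `run_pipeline(organize("interview.wav", {"profile": "default"}))` fills in `transcript` on the returned object. Keeping each stage as a function from conversation to conversation is one simple way to make the sequence easy to reorder or extend; the real system may be organized differently.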

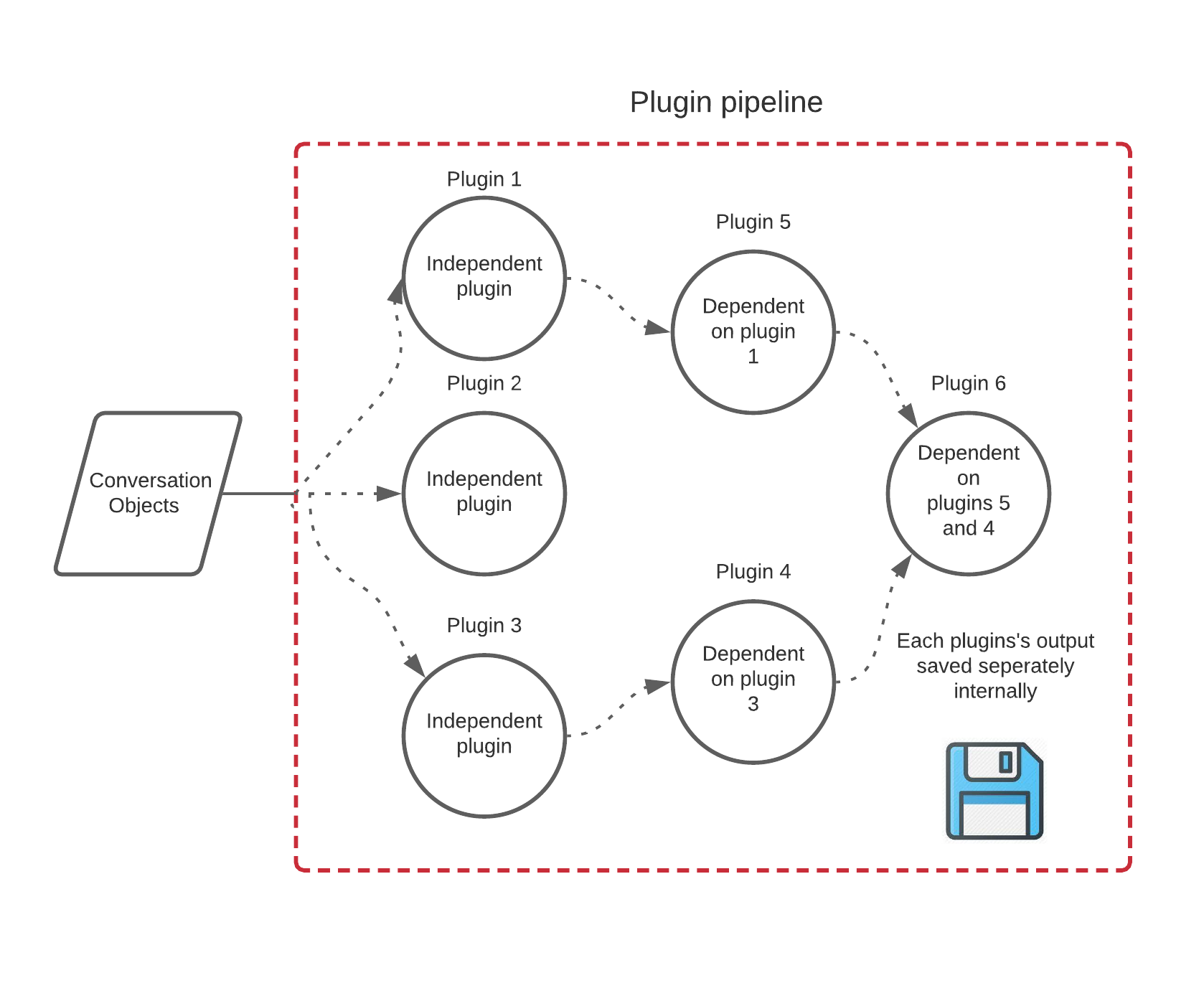

Plugins

Plugins are customizable algorithms that identify specific paralinguistic features. They interact with the core pipeline and can be configured and extended by users.
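
As a rough illustration of how a user-extensible plugin might look, the sketch below defines a small plugin interface and a registry. The `Plugin`, `TurnConstructionPlugin`, and `PluginRegistry` names are hypothetical and do not come from GailBot's code. The example plugin merges consecutive utterances by the same speaker, so a new turn begins at each speaker change.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class Plugin(ABC):
    """Hypothetical plugin interface: takes utterances, returns utterances."""
    name: str

    @abstractmethod
    def apply(self, utterances: List[dict]) -> List[dict]:
        ...


class TurnConstructionPlugin(Plugin):
    """Merge consecutive same-speaker utterances into a single turn."""
    name = "turns"

    def apply(self, utterances: List[dict]) -> List[dict]:
        turns: List[dict] = []
        for utt in utterances:
            if turns and turns[-1]["speaker"] == utt["speaker"]:
                # Same speaker continues: extend the current turn.
                turns[-1]["text"] += " " + utt["text"]
                turns[-1]["end"] = utt["end"]
            else:
                # Speaker change: start a new turn (copy to avoid mutation).
                turns.append(dict(utt))
        return turns


class PluginRegistry:
    """Hypothetical registry that lets users add or replace plugins by name."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Plugin] = {}

    def register(self, plugin: Plugin) -> None:
        self._plugins[plugin.name] = plugin

    def run(self, names: List[str], utterances: List[dict]) -> List[dict]:
        # Apply the selected plugins in the order given.
        for name in names:
            utterances = self._plugins[name].apply(utterances)
        return utterances
```

Registering a plugin under an existing name replaces it, which is one simple way to support improving a plugin without touching the pipeline itself.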

Algorithms

| Algorithm Name | Description |
|---|---|
| Turn Construction | Identifies turn transitions using speaker changes. |
| Silences | Classifies silences as micropause, pause, or gap based on duration. |
| Overlapping Speech | Detects overlaps using speaker identity and utterance timing. |
| Syllable Rate | Estimates syllable rate and identifies fast or slow speech using median absolute deviation. |
| Laughter Detection | Uses a neural network to identify laughter segments. |
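
Three of the heuristics in the table can be sketched in a few lines each. The code below is an illustration under stated assumptions, not GailBot's implementation: the duration thresholds (`MICROPAUSE_MAX`, `PAUSE_MAX`) and the deviation multiplier `k` are placeholder values chosen for the example, and the syllable rates are taken as given rather than estimated from audio.

```python
from statistics import median
from typing import List, Tuple

# Assumed thresholds in seconds; the source does not state GailBot's values.
MICROPAUSE_MAX = 0.2
PAUSE_MAX = 1.0


def classify_silence(duration: float) -> str:
    """Label a silence by duration alone."""
    if duration <= MICROPAUSE_MAX:
        return "micropause"
    if duration <= PAUSE_MAX:
        return "pause"
    return "gap"


def find_overlaps(utterances: List[dict]) -> List[Tuple[float, float]]:
    """Return (start, end) spans where two different speakers talk at once."""
    overlaps = []
    for i, a in enumerate(utterances):
        for b in utterances[i + 1:]:
            if a["speaker"] == b["speaker"]:
                continue  # Overlap requires different speakers.
            start = max(a["start"], b["start"])
            end = min(a["end"], b["end"])
            if start < end:
                overlaps.append((start, end))
    return overlaps


def label_syllable_rates(rates: List[float], k: float = 2.0) -> List[str]:
    """Flag rates more than k median absolute deviations from the median."""
    med = median(rates)
    mad = median([abs(r - med) for r in rates])
    labels = []
    for r in rates:
        if mad > 0 and r > med + k * mad:
            labels.append("fast")
        elif mad > 0 and r < med - k * mad:
            labels.append("slow")
        else:
            labels.append("normal")
    return labels
```

The median absolute deviation is used instead of the standard deviation because a few very fast or very slow utterances do not shift it much, so those same utterances remain detectable as outliers.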

Performance

GailBot’s performance was evaluated on several corpora of differing audio quality and compared with human transcriptions and other automated systems. Key metrics included Word Error Rate (WER), overlap and silence accuracy, and transcription time savings. GailBot was found to significantly reduce transcription time and produce useful first-draft CA transcripts, although it has limitations in speaker diarization and in identifying exact overlap markers and silence durations.
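
For readers unfamiliar with WER, it is the word-level edit distance between a reference transcript and a hypothesis (substitutions, deletions, and insertions), divided by the number of words in the reference. The sketch below is a standard textbook computation with a hypothetical function name. It is not the evaluation code used for GailBot, and it assumes whitespace tokenization with no text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the first i-1 reference words
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)
```

For the reference "the cat sat on the mat" and the hypothesis "the cat sit on mat", there is one substitution and one deletion against six reference words, so the function returns 2/6.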

Discussion

GailBot provides an extensible framework for transcribing paralinguistic features and generating draft CA transcripts. Future improvements may include data-driven models and addressing biases in ASR systems. GailBot facilitates the creation of large-scale CA corpora, enabling new research opportunities across social and computational sciences.

Acknowledgements

The development of GailBot was supported by AFOSR grant FA9550-18-1-0465, the School of Arts & Sciences, and the School of Engineering at Tufts University.

I extend my deepest gratitude to our main collaborators for their integral contributions to GailBot’s development. As the lead developer at Tufts University, I focused on enhancing naturalistic turn-taking in dialogue systems. Dr. Julia Mertens’ insights into communication dynamics, Dr. Saul Albert’s pioneering work in conversation analysis and AI, and J.P. De Ruiter’s guidance as Principal Investigator have been instrumental. Their expertise has shaped GailBot into a versatile research tool.

Undergraduate Contributors

I extend my heartfelt gratitude to all the undergraduate contributors who have been instrumental in the development of GailBot. Your dedication, creativity, and hard work have significantly enriched our project, from refining the application’s functionalities to implementing cutting-edge features. Each of you has played a crucial role in advancing our research goals, and your contributions will undoubtedly leave a lasting impact on the field of human-robot interaction and dialogue systems. Thank you for your commitment and invaluable efforts throughout this journey.

| Student Name | Dates |
|---|---|
| Vivian (Yike) Li | Fall 2022, Spring 2023, Spring 2024 |
| Hannah Shader | Fall 2023, Spring 2024, Summer 2024 |
| Sophie Clemens | Summer 2024 |
| Daniel Bergen | Summer 2024 |
| Eva Caro | Summer 2024 |
| Marti Zentmaier | Summer 2024 |
| Erin Sarlak | Spring 2024 |
| Joanne Fan | Spring 2024 |
| Riddhi Sahni | Spring 2024 |
| Lakshita Jain | Fall 2023 |
| Anya Bhatia | Fall 2023 |
| Jason Wu | Summer 2023 |
| Jacob Boyar | Summer 2023 |
| Siara Small | Fall 2022, Spring 2023 |
| Annika Tanner | Spring 2022 |
| Muyin Yao | Spring 2022 |
| Rosanna Vitiello | Spring 2021 |
| Eva Denman | Spring 2021 |