LLMs know what to say but not when to speak

Turn-taking is crucial for smooth communication, yet LLMs often fail in natural conversations because they are trained on written language and focus on turn-final Transition Relevance Places (TRPs). This paper evaluates LLMs' ability to predict within-turn TRPs, which are essential for natural dialogue. Using a unique dataset of participant-labeled within-turn TRPs, we assess LLMs' prediction accuracy. The results highlight LLMs' limitations in modeling spoken language dynamics and can guide the development of more naturalistic spoken dialogue systems.

Project Dates: 5/10/24 - 6/15/24

Date Published: 6/23/24

NOTE: This work was accepted for publication in the Findings of the Conference on Empirical Methods in Natural Language Processing (EMNLP) 2024. While the official proceedings are not yet available, the arXiv preprint is.

Introduction

Smooth turn-taking is essential for effective communication. In everyday conversation, people naturally avoid speaking simultaneously and take turns speaking and listening. This process relies on recognizing and predicting Transition Relevance Places (TRPs): points in the speaker’s utterance where a listener could take over. TRPs are crucial for maintaining conversational flow and mutual understanding. They allow seamless transitions between speakers, and listeners often produce brief feedback signals, such as “hm-mm,” at these points. The ability to predict TRPs depends on understanding lexico-syntactic, contextual, and intonational cues. This skill is vital for both human conversationalists and artificial conversational agents, enabling them to take turns and provide feedback at appropriate moments.

Recent advances in Large Language Models (LLMs) have led to efforts to improve turn-taking in Spoken Dialogue Systems (SDS). Models such as TurnGPT and RC-TurnGPT are probabilistic models that predict TRPs from linguistic context. However, these models often struggle in unscripted interactions, producing long silences or poorly timed feedback. This limitation stems from the optimistic assumption that LLMs, which are trained primarily on written language, can generalize to spoken dialogue.

To address these issues, we collected a unique dataset of human responses to identify within-turn TRPs in natural conversations. This dataset allows us to evaluate how well current LLMs can predict these TRPs. The goal is to enable dialogue systems to initiate turns and provide feedback with correct, human-like timing. Specifically, our contributions in this work are:

- Empirical Dataset Creation: We created the In Conversation Corpus (ICC) containing high-quality recordings of natural informal dialogues in American English, including 17 selected conversations (425 minutes of talk) used for this study.

- Human Response Collection: We obtained instinctive localizations of within-turn TRPs from 118 native English speakers, yielding a rich dataset with an average of 159 responses per stimulus turn. The audio for these responses is publicly available.

- Task Formalization: We formalized the within-turn TRP prediction task, defining it in terms of discrete intervals and establishing evaluation metrics.

- Evaluation of LLMs: We evaluated the performance of state-of-the-art LLMs under different prompting conditions, highlighting the models’ shortcomings and the need for better training on spoken dialogue data.

Data Collection

We created the In Conversation Corpus (ICC), containing high-quality recordings of natural informal dialogues in American English. The ICC includes recordings of 93 conversations, each approximately 25 minutes long, featuring pairs of undergraduate students engaging in free, unscripted conversations. From this corpus, we selected 17 conversations (approximately 425 minutes of talk) for our study.

To obtain participants’ instinctive localization of within-turn TRPs, we selected 55 turns (28.33 minutes of talk) that each contained at least two Turn Constructional Units (TCUs). We had 118 native English speakers listen to these turns and verbalize brief backchannels (e.g., “hm-mm,” “yes”) at points they perceived as TRPs. This provided a rich dataset, with an average of 159 responses per stimulus turn, allowing us to estimate both the probability of TRP occurrence and the distribution of their estimated locations.

Details of Stimulus Lists

Each participant heard one of two lists of stimulus turns, in either its original or reversed order to counterbalance order effects. The two lists are summarized below:

|  | List 1 | List 2 |
|---|---|---|
| List duration (sec) | 846.33 | 853.47 |
| Number of words | 2558 | 2693 |
| Number of participants | 60 | 58 |
| Number of stimuli | 28 | 27 |
| Avg. stimulus duration (sec) | 30.48 | 31.67 |
| Avg. words per stimulus | 91.3 | 99.7 |
| Avg. responses per stimulus | 156 | 162 |
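As a consistency check, the aggregate figures quoted in the text can be recovered from the table. The per-list response averages are rounded, so the overall average shown here is approximate:

\[
\begin{aligned}
\text{Total stimulus duration} &= 846.33 + 853.47 = 1699.80\ \text{s} = 28.33\ \text{min}\\
\text{Stimuli} &= 28 + 27 = 55, \qquad \text{Participants} = 60 + 58 = 118\\
\text{Avg. responses per stimulus} &\approx \frac{156 \cdot 28 + 162 \cdot 27}{55} = \frac{8742}{55} \approx 159
\end{aligned}
\]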

Within-Turn TRP Prediction Task

The task is to predict TRPs within a turn from prefixes of the speaker’s utterance. We formalized it by discretizing each stimulus into intervals bounded by the temporal midpoints of consecutive words, which mark where TRPs might occur. Each interval’s TRP status is determined by the proportion of participant responses falling within it, with a threshold (τ = 0.3) indicating sufficient agreement. We used this data to evaluate LLMs’ ability to predict TRPs, defining the prediction task as determining, for each prefix of a stimulus, whether a TRP follows it.
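A minimal Python sketch of this discretization follows. It rests on several assumptions that the text does not pin down:

- each interval runs from one word’s midpoint up to, but not including, the next word’s midpoint;
- a response is assigned to the interval that contains its onset time;
- responses that fall outside every interval are ignored;
- the proportion is the share of participants, each counted once, who responded in the interval.

The `Word` class and the `label_intervals` function are hypothetical names used only for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Word:
    text: str
    start: float  # t^s in seconds
    end: float    # t^e in seconds


def label_intervals(
    words: Sequence[Word],
    responses: Sequence[Tuple[str, float]],  # (participant id, response onset t^s)
    n_participants: int,
    tau: float = 0.3,
) -> Tuple[List[float], List[int]]:
    """Return per-interval response proportions and binary TRP labels.

    Interval k (0-based) lies between the midpoints of words k and k + 1,
    so a stimulus of N words yields N - 1 intervals.
    """
    # Temporal midpoint t^m = (t^s + t^e) / 2 of every word.
    mids = [(w.start + w.end) / 2 for w in words]
    responders = [set() for _ in range(len(mids) - 1)]
    for participant, onset in responses:
        # bisect_right locates the last midpoint <= onset; it opens the interval.
        k = bisect_right(mids, onset) - 1
        if 0 <= k < len(responders):
            responders[k].add(participant)
    proportions = [len(r) / n_participants for r in responders]
    labels = [int(p > tau) for p in proportions]  # TRP where proportion exceeds tau
    return proportions, labels


# Usage with a made-up four-word stimulus and five listeners.
words = [Word("so", 0.0, 0.2), Word("yeah", 0.25, 0.5),
         Word("it", 0.6, 0.7), Word("was", 0.7, 0.9)]
responses = [("p1", 0.40), ("p2", 0.45), ("p3", 0.50), ("p4", 0.20)]
props, labels = label_intervals(words, responses, n_participants=5)
# props == [0.2, 0.6, 0.0], labels == [0, 1, 0]
```

Counting distinct participants per interval keeps each proportion within [0, 1], even if one listener responds twice in the same gap.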

Task Formalization

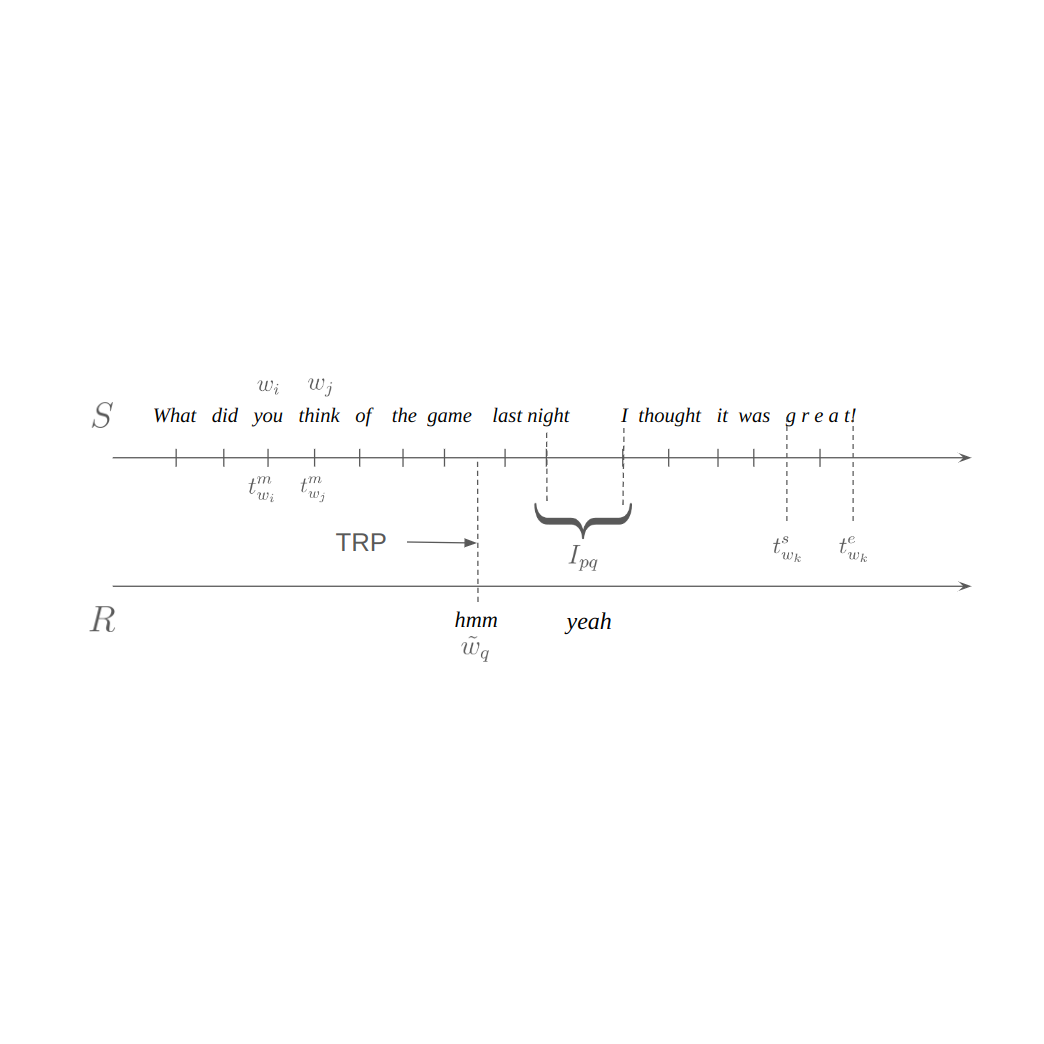

We define a single stimulus \(S\) as a sequence of words \(w_{i}\) with their start \(t_{w_{i}}^s\) and end \(t_{w_{i}}^e\) times:

\[S = \langle (w_{1}, t_{w_{1}}^s, t_{w_{1}}^e), (w_{2}, t_{w_{2}}^s, t_{w_{2}}^e), \ldots, (w_{N}, t_{w_{N}}^s, t_{w_{N}}^e) \rangle\]

Similarly, participant responses are defined as:

\[R = \langle (\tilde{w}_{1}, t_{\tilde{w}_{1}}^s, t_{\tilde{w}_{1}}^e), (\tilde{w}_{2}, t_{\tilde{w}_{2}}^s, t_{\tilde{w}_{2}}^e), \ldots, (\tilde{w}_{M}, t_{\tilde{w}_{M}}^s, t_{\tilde{w}_{M}}^e) \rangle\]

We use ELAN to manually annotate each word and its timing in both the stimulus and the response audio. This precise timing helps avoid misclassifying non-verbal sounds as responses. The temporal midpoints of words, \(t_{w_{i}}^m\), are used to create intervals \(I_{ij}\) between consecutive words, each spanning from the midpoint of \(w_{i}\) to the midpoint of \(w_{j}\):

\[t_{w_{i}}^m = (t_{w_{i}}^s + t_{w_{i}}^e) / 2\]

\[I_{ij}, \quad 1 \leq i,j \leq N, \quad j = i+1\]

We then determine the proportion of participant responses whose onsets \(t_{\tilde{w}_{q}}^s\) fall within each interval \(I_{ij}\). A TRP occurs in an interval if this proportion exceeds the threshold \(\tau\):

\[I_{ij}^{Proportion} > \tau\]

A TRP for an interval is defined as:

\[T_{i} \in \{0,1\}\]

indicating the occurrence (1) or absence (0) of a TRP. We define \(\mathcal{T}_{R, S}^{Participants}\) as the sequence of binary TRP indicators derived from participant responses. The task is to predict \(\mathcal{T}_{R, S}^{Predicted}\):

\[\mathcal{T}_{R, S}^{Predicted} = \langle T_{1}, T_{2}, \ldots, T_{N-1} \rangle\]

where each \(T_{i}\) refers to the interval immediately following the prefix \(P_{i}\):

\[P_{i} = \langle w_{1}, w_{2}, \ldots, w_{i} \rangle\]

The prediction task is formalized as:

\[\text{Given } S \text{ and } \mathcal{P}_{S}, \text{ determine } \mathcal{T}_{R, S}^{Predicted}\]

where \(\mathcal{P}_{S}\) is the set of prefixes \(P_{1}, \ldots, P_{N-1}\) of \(S\), one for each within-turn interval. This decomposes the task into a set of string classification problems, with the turn presented incrementally as prefixes. Each \(T_{i}\) is classified independently, using only the words in \(P_{i}\).
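The incremental decomposition can be sketched as follows.

- `classify_prefix` is a hypothetical placeholder for whatever produces a 0/1 decision for one prefix; in the study, that is a prompted LLM.
- The toy rule in the usage lines exists only to make the sketch runnable. It is not a TRP model.
- The sketch produces one decision per prefix \(P_1, \ldots, P_{N-1}\). This assumes that, as with the intervals, the turn-final position is excluded.

```python
from typing import Callable, List, Sequence


def prefixes(words: Sequence[str]) -> List[List[str]]:
    """P_i = <w_1, ..., w_i> for i = 1 .. N-1 (one per within-turn interval)."""
    return [list(words[:i]) for i in range(1, len(words))]


def predict_trps(
    words: Sequence[str],
    classify_prefix: Callable[[List[str]], int],
) -> List[int]:
    """Build T^Predicted by classifying every prefix independently.

    Each call sees only the words of its own prefix, never later words.
    """
    return [classify_prefix(p) for p in prefixes(words)]


# Hypothetical toy stand-in for an LLM call, for illustration only.
def toy_classifier(prefix: List[str]) -> int:
    return int(prefix[-1] in {"yeah", "right"})


turn = ["i", "went", "home", "yeah", "and", "then"]
predicted = predict_trps(turn, toy_classifier)
# predicted == [0, 0, 0, 1, 0]
```

Because every prefix is classified in isolation, the model cannot revise an earlier decision after seeing later words. This mirrors a listener who must decide in real time.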

Evaluation Metrics

F1 Score

The F1 score balances precision and recall, providing a single metric for model accuracy in predicting TRPs. This is particularly important given the imbalance between intervals with and without TRPs. The F1 score is calculated as:

\[F1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}\]

Free-Marginal Multirater Kappa

This statistic measures the agreement between multiple raters (models and human participants) on TRP identification, providing insights into model consistency and alignment with human judgments.

The kappa statistic for all intervals, \(k_{free}^{all}\), is calculated as:

\[k_{free}^{all} = \frac{\frac{1}{Nn(n-1)}\left(\sum_{i=1}^{N}\sum_{j=1}^{K} n_{ij}^2 - Nn\right) - \frac{1}{K}}{1 - \frac{1}{K}}\]

where \(N\) is the number of intervals, \(n\) is the number of raters, \(K\) is the number of categories, and \(n_{ij}\) is the number of raters who assigned interval \(i\) to category \(j\).

The kappa statistic for true TRP intervals \((k_{free}^{true})\) is calculated similarly but only considers intervals where a TRP occurred.
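The F1 score and the free-marginal kappa can be sketched in plain Python as follows. Three assumptions apply:

- The sketch pools intervals into a single label sequence. Whether the reported scores are pooled or averaged per stimulus is not stated here.
- Returning 0.0 when a denominator is zero is a convention chosen for this sketch.
- The kappa function implements Randolph’s free-marginal multirater kappa, in which the \(-Nn\) term sits inside the normalization by \(Nn(n-1)\). The counts \(n_{ij}\) are supplied as a table, and \(k_{free}^{true}\) is the same computation restricted to the rows for true-TRP intervals.

```python
from typing import List, Sequence, Tuple


def precision_recall_f1(true: Sequence[int], pred: Sequence[int]) -> Tuple[float, float, float]:
    """Precision, recall, and F1 for binary TRP labels (1 = TRP)."""
    tp = sum(1 for t, p in zip(true, pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(true, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(true, pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def free_marginal_kappa(counts: List[List[int]]) -> float:
    """Free-marginal multirater kappa.

    counts[i][j] = n_ij, the number of raters assigning interval i to
    category j. Every row must sum to the same number of raters n >= 2;
    K is the number of columns (2 for TRP / no TRP).
    """
    N, K = len(counts), len(counts[0])
    n = sum(counts[0])
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("need the same number (>= 2) of raters for every interval")
    sum_sq = sum(c * c for row in counts for c in row)
    p_obs = (sum_sq - N * n) / (N * n * (n - 1))  # observed agreement
    p_exp = 1 / K  # chance agreement when marginals are free
    return (p_obs - p_exp) / (1 - p_exp)


def kappa_true_only(counts: List[List[int]], true: Sequence[int]) -> float:
    """k_free^true: the same statistic over true-TRP intervals only."""
    return free_marginal_kappa([row for row, t in zip(counts, true) if t == 1])


p, r, f1 = precision_recall_f1([0, 1, 0, 0, 1, 0], [0, 1, 1, 0, 0, 0])
# p == 0.5, r == 0.5, f1 == 0.5
```

With two categories, chance agreement is 0.5. Kappa therefore turns negative whenever raters agree less often than random labeling would, as in several rows of the results table.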

Normalized Mean Absolute Error (NMAE)

This metric evaluates how close model predictions are to actual TRPs, considering the variance in human responses. The NMAE is calculated as:

\[\text{NMAE} = \frac{1}{K} \sum_{i=1}^{K} d_{i,j}^{S}\]

where \(d_{i,j}^{S}\) is the minimum absolute distance, in terms of the number of intervals, between an interval in which a response was predicted \((\mathcal{T}_{R,S}^{Predicted})\) and the closest interval with a true TRP \((\mathcal{T}_{R,S}^{Participants})\).

Normalized Mean Square Error (NMSE)

This metric also evaluates the closeness of model predictions to actual TRPs but penalizes larger errors more heavily. The NMSE is calculated as:

\[\text{NMSE} = \frac{1}{K} \sum_{i=1}^{K} (d_{i,j}^{S})^2\]

where \(d_{i,j}^{S}\) is defined as above.
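Both distance metrics reduce to the same per-prediction distances. The sketch below takes the formulas as written and makes two assumptions:

- \(K\) counts the intervals with a predicted TRP, so the sum runs over predictions rather than over categories as in the kappa formula.
- A stimulus with no predicted TRP yields NaN rather than zero error.

Any further normalization behind the reported values is not captured here.

```python
import math
from typing import List, Sequence, Tuple


def distances_to_nearest_trp(true: Sequence[int], pred: Sequence[int]) -> List[int]:
    """d for each predicted interval: distance (in intervals) to the closest true TRP."""
    true_idx = [j for j, t in enumerate(true) if t == 1]
    if not true_idx:
        raise ValueError("stimulus has no true TRP intervals")
    return [min(abs(i - j) for j in true_idx) for i, p in enumerate(pred) if p == 1]


def nmae_nmse(true: Sequence[int], pred: Sequence[int]) -> Tuple[float, float]:
    d = distances_to_nearest_trp(true, pred)
    K = len(d)  # assumed: number of intervals with a predicted TRP
    if K == 0:
        return math.nan, math.nan
    nmae = sum(d) / K
    nmse = sum(x * x for x in d) / K  # squaring penalizes distant misses more
    return nmae, nmse


true = [0, 1, 0, 0, 0, 1, 0, 0]
pred = [1, 1, 0, 0, 0, 0, 0, 1]
result = nmae_nmse(true, pred)
# distances [1, 0, 2] -> NMAE 1.0, NMSE 5/3
```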

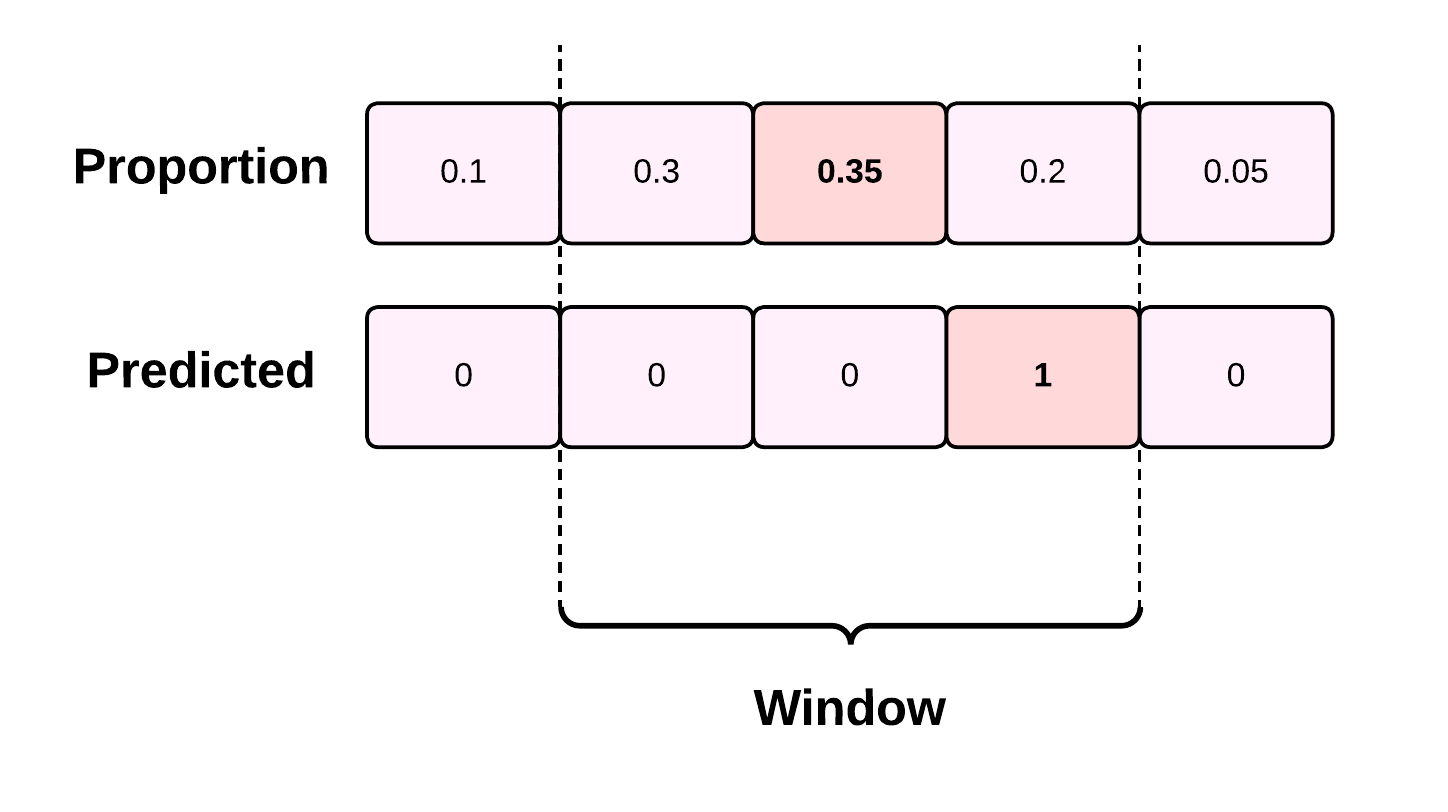

Density-Adjusted NMAE

This metric further adjusts the NMAE by considering the density of participant responses around TRPs. It accounts for the proportion of responses within a specified window size around each true TRP. The density for a window size \(W\) is calculated as:

\[\text{Density}_{S}(I_{ij}, W) = \sum_{l=-\frac{W}{2}}^{\frac{W}{2}} I_{i+l,j+l}^{Proportion}\]

The density-adjusted NMAE is then calculated as:

\[\textit{NMAE}_{DA} = \frac{1}{K} \sum_{i=1}^{K} \frac{d_{i,j}^{S}}{\text{Density}_{S}(I_{ij}, W)}\]

Together, these metrics evaluate the model’s ability to predict TRPs accurately. They cover both overall performance and the specific conditions under which human agreement on TRP locations is high.
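The density-adjusted variant can be sketched as follows, reusing the per-interval response proportions \(I_{ij}^{Proportion}\). Several details are assumptions rather than statements from the text:

- \(W\) is even.
- The window is clipped at the edges of the stimulus.
- Each prediction is matched to its nearest true TRP interval, with ties going to the earlier interval.
- The density is taken around that matched interval.

Because a true TRP interval always has a proportion above \(\tau > 0\), the density is never zero when the labels and proportions come from the same data.

```python
import math
from typing import Sequence


def density(proportions: Sequence[float], center: int, window: int) -> float:
    """Sum of proportions for intervals center - W/2 .. center + W/2, clipped to the stimulus."""
    half = window // 2
    lo = max(0, center - half)
    hi = min(len(proportions) - 1, center + half)
    return sum(proportions[lo:hi + 1])


def nmae_density_adjusted(
    true: Sequence[int],
    pred: Sequence[int],
    proportions: Sequence[float],
    window: int = 2,
) -> float:
    true_idx = [j for j, t in enumerate(true) if t == 1]
    if not true_idx:
        raise ValueError("stimulus has no true TRP intervals")
    terms = []
    for i, p in enumerate(pred):
        if p != 1:
            continue
        nearest = min(true_idx, key=lambda j: abs(i - j))  # first of any ties
        # Distance to the matched TRP, divided by the local response density.
        terms.append(abs(i - nearest) / density(proportions, nearest, window))
    return sum(terms) / len(terms) if terms else math.nan


true = [0, 1, 0, 0, 0, 1, 0, 0]
pred = [1, 1, 0, 0, 0, 0, 0, 1]
proportions = [0.1, 0.5, 0.1, 0.0, 0.0, 0.4, 0.2, 0.0]
score = nmae_density_adjusted(true, pred, proportions, window=2)
# (1 / 0.7 + 0 + 2 / 0.6) / 3, roughly 1.587
```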

Experiments and Results

We evaluated multiple large language models, including GPT-4 Omni, Phi3, Gemma2, Llama3.1, and Mistral, on the within-turn TRP prediction task. Each model was tested under two conditions: “participant” (simplified instructions) and “expert” (detailed theoretical context). The results below show considerable variability across models. GPT-4 Omni is the strongest performer overall, but even its best F1 score (0.152) shows clear limitations in predicting TRPs. This underlines the challenge of adapting LLMs to nuanced conversational tasks.

| Model | Condition | Precision | Recall | F1 Score | \(k_{free}^{all}\) | \(k_{free}^{true}\) | NMAE | NMSE | \(\textit{NMAE}_{DA}\) |
|---|---|---|---|---|---|---|---|---|---|
| GPT-4 Omni | Participant | 0.153 | 0.153 | 0.152 | 0.891 | 0.325 | 0.286 | 3.140 | 11.280 |
| GPT-4 Omni | Expert | 0.122 | 0.185 | 0.147 | 0.860 | 0.201 | 0.253 | 5.360 | 16.560 |
| Phi3:3.8b | Participant | 0.034 | 0.923 | 0.067 | -0.671 | -0.417 | 0.192 | 5.189 | 16.430 |
| Phi3:3.8b | Expert | 0.031 | 0.083 | 0.045 | 0.779 | 0.001 | 0.251 | 8.648 | 21.640 |
| Phi3:14b | Participant | 0.035 | 0.326 | 0.063 | 0.374 | -0.157 | 0.202 | 6.280 | 18.060 |
| Phi3:14b | Expert | 0.039 | 0.057 | 0.046 | 0.845 | 0.137 | 0.232 | 5.091 | 16.920 |
| Gemma2:9b | Participant | 0.028 | 0.285 | 0.052 | 0.322 | -0.088 | 0.224 | 8.059 | 20.770 |
| Gemma2:9b | Expert | 0.022 | 0.178 | 0.039 | 0.441 | -0.087 | 0.239 | 8.784 | 22.180 |
| Gemma2:27b | Participant | 0.033 | 0.490 | 0.063 | 0.034 | -0.387 | 0.194 | 5.260 | 16.650 |
| Gemma2:27b | Expert | 0.039 | 0.307 | 0.068 | 0.459 | -0.232 | 0.206 | 5.790 | 17.560 |
| Llama3.1:8b | Participant | 0.014 | 0.082 | 0.025 | 0.618 | -0.106 | 0.265 | 9.815 | 24.320 |
| Llama3.1:8b | Expert | 0.020 | 0.077 | 0.032 | 0.692 | -0.071 | 0.268 | 9.947 | 24.420 |
| Mistral:7b | Participant | 0.033 | 0.804 | 0.064 | -0.517 | -0.413 | 0.194 | 5.168 | 16.510 |
| Mistral:7b | Expert | 0.037 | 0.266 | 0.065 | 0.498 | -0.222 | 0.190 | 5.136 | 16.110 |

Discussion

Our findings reveal that LLMs underperform in predicting TRPs, even with extensive pre-training. This limitation impacts the development of SDS, which rely on accurate turn-taking for user satisfaction and communicative accuracy. The inability of LLMs to effectively use their linguistic knowledge for TRP prediction underscores the need for specialized training on spoken dialogue data.

Our study provides several insights:

- Empirical Data: The dataset of human-detected TRPs in natural conversations offers a valuable resource for evaluating and improving LLMs.

- Task-Specific Challenges: High performance on written-language benchmarks does not translate to spoken language tasks, highlighting a critical bottleneck.

- Future Directions: Fine-tuning on conversational data, incorporating non-verbal cues, and optimizing prompts could improve model performance.

In summary, despite their capabilities, LLMs currently underperform at predicting the TRPs needed for smooth turn-taking. Our study highlights the need for better training on spoken dialogue data and lays a foundation for improving conversational agents. The dataset we created will let researchers explore ways to improve model performance on this crucial task. Beyond fine-tuning LLMs on conversational data, incorporating non-verbal cues, and optimizing prompt designs, future research could also analyze the reasoning LLMs report for their TRP predictions.

Acknowledgements

This research was supported in part by Other Transaction award HR00112490378 from the U.S. Defense Advanced Research Projects Agency (DARPA) Friction for Accountability in Conversational Transactions (FACT) program.

We would also like to acknowledge Grace Hustace for her contributions to data collection and processing, and Dr. Julia Mertens for her input during early-stage discussions, both of whom are affiliated with the Human Interaction Lab at Tufts University.